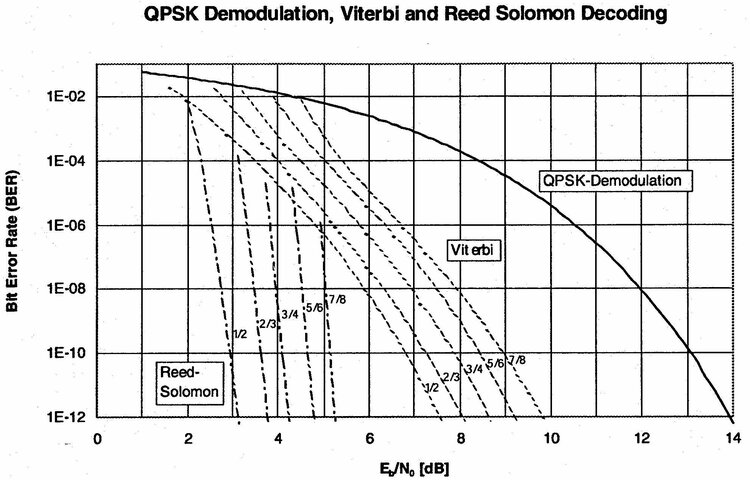

A bit-error-rate test that compares the data at the receiver with the original signal would be the deciding factor. I doubt that any of us is equipped to carry out such a test.
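For anyone curious what such a test boils down to, here is a minimal sketch in Python. It assumes you somehow have both the transmitted and the received bitstreams as equal-length byte strings, which is exactly the part none of us can do at home. The function name `bit_error_rate` is just an illustrative choice, not part of any receiver's software.

```python
def bit_error_rate(sent: bytes, received: bytes) -> float:
    """Return the fraction of bits that differ between two byte strings.

    Assumes both streams are already aligned and the same length.
    """
    if len(sent) != len(received):
        raise ValueError("streams must be the same length")
    if not sent:
        raise ValueError("streams must not be empty")
    # XOR leaves a 1 in every bit position where the two streams disagree.
    wrong_bits = sum(bin(a ^ b).count("1") for a, b in zip(sent, received))
    return wrong_bits / (8 * len(sent))
```

A single flipped bit in two bytes, for example, gives a rate of 1 in 16.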

Those who believe that no errors exist in digital TV signals must be smoking something. Of course there are errors. The bit error rate rises as the signal strength decreases, and once it reaches an unacceptable threshold, the receiver will shut down. That threshold is high enough, and the bad data is spread widely enough across the picture, that errors below it go unnoticed by viewers.
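To put rough numbers on how the error rate climbs as the signal weakens, here is a sketch using the textbook formula for uncoded BPSK over a noisy channel. Real satellite TV uses different modulation plus error-correcting codes, so the actual figures will differ. The shutdown threshold below is a made-up value for illustration only, as are the function names.

```python
import math

# Hypothetical cutoff for illustration; not taken from any real receiver.
HYPOTHETICAL_BER_THRESHOLD = 1e-3


def uncoded_bpsk_ber(ebn0_db: float) -> float:
    """Theoretical bit error rate of uncoded BPSK at a given Eb/N0 in dB."""
    ebn0 = 10 ** (ebn0_db / 10)  # convert dB to a linear ratio
    return 0.5 * math.erfc(math.sqrt(ebn0))


def receiver_stays_up(ebn0_db: float,
                      threshold: float = HYPOTHETICAL_BER_THRESHOLD) -> bool:
    """True while the error rate is at or below the assumed threshold."""
    return uncoded_bpsk_ber(ebn0_db) <= threshold


if __name__ == "__main__":
    # Step the signal down and watch the error rate climb.
    for db in (10, 8, 6, 4, 2, 0):
        ber = uncoded_bpsk_ber(db)
        print(f"{db:>2} dB  BER={ber:.2e}  up={receiver_stays_up(db)}")
```

The point is the shape of the curve: errors are never zero, they just grow as the signal weakens until the receiver gives up.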

Live TV doesn't allow for error checking and retransmission the way sending data over the Internet does. The erroneous data is simply used as long as the error threshold hasn't been reached.

If no errors were tolerated, receivers would be shutting down all over the neighborhood any time an airplane flew through the line of sight of the dish-to-satellite link. They don't, because the systems accept a certain amount of bad data.