I'd assume the former. Stations were provided receivers.

Now, why do you think that, given all this time, some stations have not upgraded to DVB-S2? Is it just plain procrastination, or money issues?

PBS mux on 125W moving around...preparing for changes

- Thread starter Mr Tony


- Replies 232

- Views 38K


It's no more bit-starved than any other broadcast network. ATSC requires MPEG-2, so that's what PBS/ABC/NBC/FOX/CBS/CW use.

H.264 has been part of the ATSC standards since 2008. Broadcasters could use H.264 over ATSC if they wanted to, but they'd rather accommodate stubborn people who don't want to update their equipment to handle a codec that is not 16 years old, so we all must suffer with MPEG-2 because of people with MPEG-2-only equipment dragging us down. The only way to bypass the quality problem is to bypass the middlemen who are ruining the quality of our television in the first place: the network affiliates.

And yes, while the situation does seem to be deteriorating amongst the broadcast networks, most NBC affiliates are around 15 Mbps nowadays; there are many full-bitrate (16+ Mbps) CBS affiliates around; and given the way Fox is distributed, most, if not all, Fox affiliates should be 720p @ 14 Mbps. CW varies wildly. And we all know that ABC affiliates are terrible, typically 8-12 Mbps @ 720p. ABC is the only network that routinely manages to look worse than PBS.
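To put those bitrates on a common footing, here is a rough bits-per-pixel sketch. It assumes the quoted Mbps figures are pure video bitrate (no audio or transport overhead), counts only luma pixels, and uses the 60000/1001 picture rate; the two 11 Mbps rows just spread the PBS figure mentioned later in the thread over each format for comparison.

```python
from fractions import Fraction

# The "60" picture rate is really 60000/1001 (about 59.94) per second:
# frames for 720p, fields for 1080i.
PICTURE_RATE = Fraction(60000, 1001)

# Luma pixels per second carried by each format.
PIXEL_RATE = {
    "720p": 1280 * 720 * PICTURE_RATE,   # full 1280x720 frames
    "1080i": 1920 * 540 * PICTURE_RATE,  # 1920x540 fields
}


def bits_per_pixel(mbps: float, fmt: str) -> float:
    """Average coded bits available per luma pixel at a given video bitrate."""
    return mbps * 1_000_000 / float(PIXEL_RATE[fmt])


examples = [
    ("Fox, 720p @ 14 Mbps", 14, "720p"),
    ("ABC, 720p @ 8 Mbps", 8, "720p"),
    ("ABC, 720p @ 12 Mbps", 12, "720p"),
    ("11 Mbps as 720p", 11, "720p"),
    ("11 Mbps as 1080i", 11, "1080i"),
]
for label, mbps, fmt in examples:
    print(f"{label}: {bits_per_pixel(mbps, fmt):.3f} bits/pixel")
```

Higher is better, but it's only a crude proxy: motion, source quality and encoder efficiency matter at least as much.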

Using MPEG-2 has nothing to do with dumbing things down for noob viewers. It's required. "Halving" (720p actually has more pixels/second than 1080i) the resolution to 720p is actually not a bad idea if you can't allocate enough bits to do 1080i properly (for some definition of "properly").

Again. It's not required. They could leave all the viewers with equipment that can only handle MPEG-2 in the dark if they wanted to. They would rather cater to the lowest common denominator, however. I refer to these LCDs as "noob viewers."

720p does not have more pixels/second than 1080i. You pulled that one out of thin air. 1080i broadcasts at 60 fields per second with each field consisting of 1,036,800 pixels. These fields are then de-interlaced on the fly by your equipment to 1080p video @ 30 frames per second with 2,073,600 pixels to a frame.

720p broadcasts at 60 frames per second with each frame consisting of 921,600 pixels. That's it. And most sources used aren't even true 60 frames per second; many 720p broadcasts actually use sources shot at 29.97 fps, and the frames are then duplicated to 60 for the 720p60 broadcast.
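The frame duplication described above, and the telecined-film case mentioned further down the thread, can be sketched with letters standing in for source frames. This is a simplified model (it ignores the 1000/1001 rate offset), and the 2:3 pulldown function only shows which film frame each interlaced field comes from; the function names are illustrative.

```python
from typing import List, Tuple


def duplicate_frames(frames: List[str], factor: int = 2) -> List[str]:
    """Repeat each source frame `factor` times, e.g. a 30 fps source sent as 720p60."""
    return [frame for frame in frames for _ in range(factor)]


def pulldown_23(frames: List[str]) -> List[Tuple[str, str]]:
    """2:3 pulldown: spread 24 fps film frames over 60 interlaced fields.

    Film frames alternately supply 2 and 3 fields, and field parity alternates
    top (T) / bottom (B). Returns one (film_frame, parity) pair per field.
    """
    fields: List[Tuple[str, str]] = []
    parity = "T"
    for i, frame in enumerate(frames):
        for _ in range(2 if i % 2 == 0 else 3):
            fields.append((frame, parity))
            parity = "B" if parity == "T" else "T"
    return fields


print(duplicate_frames(list("ABC")))
print([f"{frame}{parity}" for frame, parity in pulldown_23(list("ABCD"))])
```

Four film frames become ten fields (2+3+2+3), which is how 24 frames/s maps onto 60 fields/s.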

PBS actually did tests showing that even when stations broadcast MPEG-2, switching distribution to MPEG-4 gave a measurable improvement. IIRC, those tests used MPEG-2 at ~13Mbit/s.

Most PBS affiliates run at 11 Mbps. It's pathetic. That disturbingly low bitrate will introduce enough artifacts of its own that it diminishes any quality that may be gained by having a higher source material.


This post is so full of ignorance, I'm not sure where to start.


Xizer said: H.264 has been part of the ATSC standards since 2008. Broadcasters could use H.264 over ATSC if they wanted to, but they'd rather accommodate stubborn people who don't want to update their equipment to handle a codec that is not 16 years old, so we all must suffer with MPEG-2 because of people with MPEG-2 only equipment dragging us down.

Remember that digital conversion thing that took >10 years? Where Congress had to push the deadline multiple times and subsidize tuners so that NTSC could end? You expect to repeat that process barely three years later and make OTA viewers buy another new TV? Just because ATSC added AVC in A/73 doesn't mean anyone supports it. A/53 also has a 16VSB mode that nobody uses. The FCC incorporated A/53 only (and not even the newest version).

Xizer said: Again. It's not required. They could leave all the viewers with equipment that can only handle MPEG-2 in the dark if they wanted to. They would rather cater to the lowest common denominator, however.

Certainly no broadcaster is going to willingly lose viewers by switching to AVC. The FCC mandate for DTV is to replicate NTSC service, so there would still have to be an SD MPEG-2 program in the mux anyway. Your dream of 18 Mbit/s AVC will never happen. Wait for ATSC 2.0 or 3.0.

Xizer said: 720p does not have more pixels/second than 1080i. You pulled that one out of thin air.

1280 x 720 x 60000/1001 = 55240759 pixels/sec

1920 x 540 x 60000/1001 = 62145854 pixels/sec

720p has more temporal resolution. 1080i has more spatial resolution. Fox and Disney/ABC/ESPN chose 720p because of sports. By the time you account for kell factor (which I was) and the coding inefficiency of interlaced MPEG-2 (relative to progressive), 1080i doesn't always have the obvious advantage you seem to believe it does. It's highly unlikely your TV has a better deinterlacer than your local station. Even if it did, it would be downstream their horrible, antique relic of an MPEG-2 encoder. For a telecined source (film), 1080i is certainly superior.
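The pixel-rate arithmetic can be checked exactly with Python's `fractions` module. This counts raw luma samples only and says nothing about the Kell factor or the interlaced-coding efficiency points raised above.

```python
from fractions import Fraction

RATE = Fraction(60000, 1001)  # ~59.94 pictures/s: frames for 720p, fields for 1080i


def pixel_rate(width: int, height: int, pictures_per_sec: Fraction = RATE) -> Fraction:
    """Exact luma pixels per second for a picture size and picture rate."""
    return width * height * pictures_per_sec


p720 = pixel_rate(1280, 720)   # 720p: progressive 1280x720 frames
i1080 = pixel_rate(1920, 540)  # 1080i: each field is 1920x540

print(int(p720), "pixels/sec for 720p")    # int() truncates the fraction
print(int(i1080), "pixels/sec for 1080i")
print("1080i / 720p =", i1080 / p720)
```

The ratio comes out to exactly 9/8, i.e. by raw count 1080i carries 12.5% more pixels per second than 720p at the same 60000/1001 rate.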

Xizer said: Most PBS affiliates run at 11 Mbps. It's pathetic. That disturbingly low bitrate will introduce enough artifacts of its own that it diminishes any quality that may be gained by having a higher source material.

Given a fixed output bitrate, a better input will yield better output. It's irrelevant that the output is 11 Mbit/s (pathetic or not). CBR at that rate is crap, but maybe not if that's the long-term average within a statmux.
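A toy illustration of the CBR-versus-statmux point. The proportional-to-complexity rule, the 27.5 Mbps mux size and the complexity scores are all hypothetical; real statmux encoders use their own rate control. The idea is only that a program averaging 11 Mbps inside a statmux can borrow bits when it needs them, whereas an 11 Mbps CBR stream never can.

```python
from typing import Dict, List


def statmux_allocate(total_mbps: float, complexity: Dict[str, float]) -> Dict[str, float]:
    """Toy statmux: split a fixed mux bitrate in proportion to each program's
    (hypothetical) coding complexity for the current interval."""
    total = sum(complexity.values())
    return {name: total_mbps * c / total for name, c in complexity.items()}


def long_term_average(allocations: List[Dict[str, float]], name: str) -> float:
    """Mean bitrate a program received over all intervals."""
    return sum(a[name] for a in allocations) / len(allocations)


MUX_MBPS = 27.5  # hypothetical total video bitrate of the mux
intervals = [  # made-up complexity scores for four intervals
    {"HD": 0.9, "SD1": 0.3, "SD2": 0.3},  # fast motion on the HD program
    {"HD": 0.3, "SD1": 0.6, "SD2": 0.6},  # static HD scene, busy SD programs
    {"HD": 0.6, "SD1": 0.4, "SD2": 0.5},
    {"HD": 0.6, "SD1": 0.5, "SD2": 0.4},
]
allocations = [statmux_allocate(MUX_MBPS, c) for c in intervals]
for a in allocations:
    print({name: round(mbps, 2) for name, mbps in a.items()})
print("HD long-term average:", round(long_term_average(allocations, "HD"), 2), "Mbps")
```

Here the HD program averages 11 Mbps but gets 16.5 Mbps during the busy interval, which a constant 11 Mbps stream would never get.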

Congratulations on being the 1% (of TV snobs).

Xizer said: 1080i broadcasts at 60 fields per second with each field consisting of 1,036,800 pixels. These fields are then de-interlaced on the fly by your equipment to 1080p video @ 30 frames per second with 2,073,600 pixels to a frame.

30fps stutters too much (especially on a CRT). Any respectable TV will bob 1080@30i to 1080@60p.
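The two deinterlacing approaches being argued about can be sketched on a toy 4-line frame. `weave` is what the "1080p @ 30" description amounts to (two fields become one frame), while `bob` turns every field into its own frame, so 60 fields/s stay 60 frames/s. The line-averaging bob here is only the simplest variant (real deinterlacers are usually motion-adaptive), and in true interlaced video the two fields are captured about 1/60 s apart, so weaving moving content produces combing.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # rows of luma samples, top row first


def split_fields(frame: Frame) -> Tuple[Frame, Frame]:
    """Split an interlaced frame into its top (even rows) and bottom (odd rows) fields."""
    return frame[0::2], frame[1::2]


def weave(top: Frame, bottom: Frame) -> Frame:
    """Weave: interleave a field pair back into one full-height frame.
    Two fields in, one frame out, so 60 fields/s become 30 frames/s."""
    out: Frame = []
    for top_row, bottom_row in zip(top, bottom):
        out.append(list(top_row))
        out.append(list(bottom_row))
    return out


def bob(field: Frame, is_top: bool) -> Frame:
    """Bob: build a full-height frame from a single field, filling each missing
    line with the average of the field lines above and below it (edge lines copy
    the one available neighbour). One field in, one frame out."""
    height = 2 * len(field)
    offset = 0 if is_top else 1
    out: List[Optional[List[int]]] = [None] * height
    for i, row in enumerate(field):
        out[2 * i + offset] = list(row)
    for y in range(height):
        if out[y] is None:
            neighbours = [out[n] for n in (y - 1, y + 1)
                          if 0 <= n < height and out[n] is not None]
            out[y] = [sum(px) // len(neighbours) for px in zip(*neighbours)]
    return out  # every line has been filled at this point


frame = [[10, 10], [20, 20], [30, 30], [40, 40]]
top, bottom = split_fields(frame)
print(weave(top, bottom))          # [[10, 10], [20, 20], [30, 30], [40, 40]]
print(bob(top, is_top=True))       # [[10, 10], [20, 20], [30, 30], [30, 30]]
print(bob(bottom, is_top=False))   # [[20, 20], [20, 20], [30, 30], [40, 40]]
```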

(720p actually has more pixels/second than 1080i)

Xizer said:720p does not have more pixels/second than 1080i. You pulled that one out of thin air.

1280 x 720 x 60000/1001 = 55240759 pixels/sec

1920 x 540 x 60000/1001 = 62145854 pixels/sec

Thanks for backing up my statement?

Also, I had to laugh at this:

It's highly unlikely your TV has a better deinterlacer than your local station.

Perhaps not, but I don't use my TV's deinterlacer. All my content is viewed after being rendered by my PC first.

"Professional" grade equipment is highly overrated. It never ceases to amaze me when I see stations dropping tens of thousands of dollars on proprietary equipment that actually does an inferior job of processing content compared to what my consumer-level PC can do.

I'd bet $1,000 that my consumer level graphics card does a better job of de-interlacing content than whatever machine my local affiliates would use.

It looks like those feeds with the symbol rate of 4444 went DVB-S2. At least they were last night (the 12175 H 4444 was still DVB).

Crazy thing... the Micro didn't even need a rescan. It just changed over (and the signal is a lot lower on those than when they were DVB).


Yep, I'm getting DVB-S2 on those except for that one feed you mentioned. Quality still seems the same here, at 71% ... if it is lower, it's only by a few percentage points (I can't remember if those were at 75 or 71 for me before)


I noted the same here. The Micro just auto-fixed it.

Hello, since I just got my 125 West back up, can someone please advise me which transponders are active? I know they're moving things around up there. I did a scan and only came up with 12140, which is pretty strong though. I am just checking. Thanks!

everything is still up there as of today

vertical side

12106 V 2397** Montana

12114 V 8703** Oklahoma

12060 V 30000

12140 V 30000

12180 V 30000**

Horizontal

12150 H 14028

12163 H 4444**

12169 H 4444**

12175 H 4444

**-DVB-S2 transponders
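If you want to keep track of these lists as they change, here is a small parser sketch for the `FREQ POL SR[**] [note]` format used above. It assumes frequencies are in MHz and symbol rates in ksym/s (the usual listing convention) and that `**` marks DVB-S2 as in the legend; the `Transponder` class and function names are just illustrative.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Transponder:
    freq_mhz: int
    polarity: str          # "V" or "H"
    symbol_rate_ksps: int
    dvb_s2: bool           # True when the entry is marked with **
    note: Optional[str]    # trailing text such as "Montana"


# FREQ POL SYMBOLRATE, optional ** marker, optional note
LINE_RE = re.compile(r"^(\d{5})\s+([VH])\s+(\d+)(\*\*)?\s*(.*)$")


def parse_transponders(text: str) -> List[Transponder]:
    """Parse listing lines; headings and the ** legend don't match and are skipped."""
    result = []
    for line in text.splitlines():
        m = LINE_RE.match(line.strip())
        if m:
            freq, pol, sr, s2, note = m.groups()
            result.append(Transponder(int(freq), pol, int(sr), s2 is not None, note or None))
    return result


LISTING = """\
vertical side
12106 V 2397** Montana
12114 V 8703** Oklahoma
12060 V 30000
12140 V 30000
12180 V 30000**
Horizontal
12150 H 14028
12163 H 4444**
12169 H 4444**
12175 H 4444
**-DVB-S2 transponders
"""

for tp in parse_transponders(LISTING):
    if tp.dvb_s2:
        print(tp.freq_mhz, tp.polarity, tp.symbol_rate_ksps, tp.note or "")
```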


Thanks so much Iceberg!


Iceberg,

Got them all. Thanks! I needed to go back out and make better adjustments. I may have to go back out once I receive my new Geosatpro SL1PLL and fine tune!


Well they FINALLY did the changeover.....sort of

12150 H 14028 GONE

12163 H 4444 DVB-S2

12169 H 4444 DVB-S2

12175 H 4444 DVB still

12060 V 30000

HD "feed".....black screen

SD "feed"....black screen

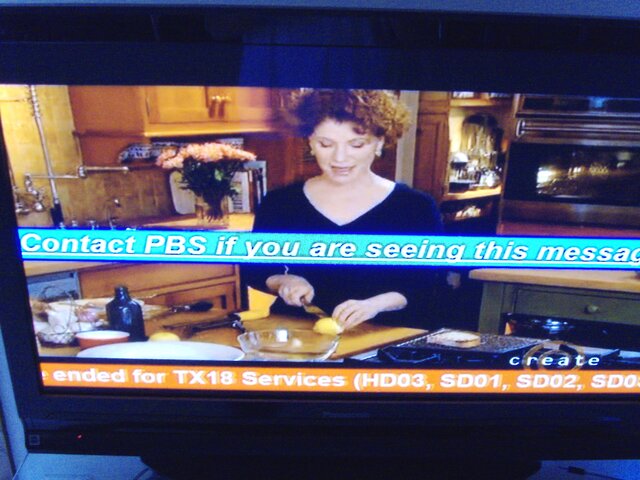

Create and V-Me have a scroll along the bottom about the channels leaving, and a HUGE blue banner across the middle.

World...black screen

Ironically PBS E & W HD (12140) is still up.


also there are now 2 DVB-S2 HD feeds replacing the 12150 H 14028 feed

12145 H 6250

12155 H 6250
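A quick sanity check that two 6250 ksym/s carriers fit where the single 14028 ksym/s carrier used to be, using the usual approximation occupied bandwidth ≈ Rs × (1 + roll-off). DVB-S always uses a 0.35 roll-off; DVB-S2 allows 0.20, 0.25 or 0.35, and the thread doesn't say which one the new carriers use, so the sketch tries all three.

```python
def occupied_bw_mhz(symbol_rate_ksps: float, rolloff: float) -> float:
    """Approximate occupied bandwidth of a carrier: Rs * (1 + roll-off)."""
    return symbol_rate_ksps / 1000 * (1 + rolloff)


def carrier_edges(center_mhz: float, symbol_rate_ksps: float, rolloff: float):
    """Lower and upper band edges (MHz) of a carrier centred on center_mhz."""
    half = occupied_bw_mhz(symbol_rate_ksps, rolloff) / 2
    return center_mhz - half, center_mhz + half


# Old DVB-S carrier: 12150 H, 14028 ksym/s, roll-off 0.35 (fixed in DVB-S).
old_lo, old_hi = carrier_edges(12150, 14028, 0.35)
print(f"old 12150 H: {old_lo:.2f}-{old_hi:.2f} MHz")

# New DVB-S2 carriers: roll-off not stated in the thread, so try each DVB-S2 option.
for rolloff in (0.20, 0.25, 0.35):
    a_lo, a_hi = carrier_edges(12145, 6250, rolloff)
    b_lo, b_hi = carrier_edges(12155, 6250, rolloff)
    fits = old_lo <= a_lo and b_hi <= old_hi and a_hi <= b_lo
    print(f"roll-off {rolloff}: 12145 H {a_lo:.2f}-{a_hi:.2f}, "
          f"12155 H {b_lo:.2f}-{b_hi:.2f}, inside old slot without overlap: {fits}")
```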


I gave up trying to keep track of this until they finally turn whatever off for good. Then I'll scan the correct Tp's and be done with it lol...The banner running across the screen is annoying too.


Me too. I will miss them while they are gone. Wake me up when they come back.


Watch the mux on 12180 and no annoying banner.


They are still there.

12180 V 30000

DVB-S2

PBS HD E, W, Create, V-Me and World
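For a rough sense of how much room that one carrier has for five services, a back-of-the-envelope sketch. The thread doesn't give the MODCOD for 12180 V, so QPSK 3/4 and 8PSK 2/3 below are purely hypothetical examples, and the even split ignores statmuxing.

```python
from fractions import Fraction

BITS_PER_SYMBOL = {"QPSK": 2, "8PSK": 3}


def gross_rate_mbps(symbol_rate_ksps: int, modulation: str, code_rate: Fraction) -> Fraction:
    """Rough upper bound on a carrier's bitrate: symbol rate x bits/symbol x nominal code rate.
    Ignores the BCH outer code, frame headers and pilots, so usable payload is somewhat lower."""
    return Fraction(symbol_rate_ksps, 1000) * BITS_PER_SYMBOL[modulation] * code_rate


SERVICES = 5  # PBS HD E, PBS HD W, Create, V-Me, World
for mod, cr in [("QPSK", Fraction(3, 4)), ("8PSK", Fraction(2, 3))]:
    total = gross_rate_mbps(30000, mod, cr)
    print(f"{mod} {cr}: ~{float(total):.1f} Mbit/s total, "
          f"~{float(total / SERVICES):.1f} Mbit/s per service if split evenly")
```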

Bah! Can anyone with a Diamond 9000HD confirm whether or not they get 12180? This thing is driving me nuts...
